How Duolingo Skyrocketed Its Growth: A/B Testing Explained! 🚀

Also, let's understand what the 37% rule is and how the ‘scarcity effect’ impacts our perception.

Hello, curious minds and data enthusiasts!

Welcome to our 5th edition of DataPulse Weekly, where we unravel the magic behind data and its impact on our daily lives.

Each newsletter promises to be a journey through the fascinating intersections of data, technology, and human experiences. Whether you're an analyst, a tech enthusiast, or simply curious about how data shapes our world, you're in the right place. Let’s dive straight into today’s Data Menu -

Today’s Data Menu

Data Case Study: How Duolingo Skyrocketed Its Growth: A/B Testing Explained!

Metric of the Week: Conversion Rate

Visualization Spotlight: Conversion Rate by Industry

Human Bias Focus: Scarcity Effect

Data Nugget: 37% Rule

Data Case Study:

We've all heard about Duolingo and the viral memes surrounding it by now, haven't we? It’s the app that turned language learning from a chore into an engaging, fun-filled journey. It has grown from 3 million to 575 million users in the last few years. But even the most popular platforms face their set of challenges, and Duolingo was no exception.

Duolingo faced a critical challenge: a significant drop-off in users signing up after downloading the app or visiting the website. The initial instinct might be to prompt users to sign up immediately, capitalizing on their initial interest. However, Duolingo decided to experiment: Let’s allow the users to experience the product before asking them to commit. But how does one go about experimenting with this? Enter A/B experiments - the heart of growth.

A/B experiments have the following steps:

Define Your Goal: Pinpoint the specific improvement you're aiming for. Duolingo focused on increasing the share of app downloads that convert to sign-ups.

Craft a Testable Hypothesis: Frame your assumption in a way that's directly testable. Take Duolingo's approach as an example: "Delaying the sign-up prompt will increase daily active users."

Select One Variable to Test: Isolate a single element to modify, ensuring you can attribute any changes in outcomes to this variable. Duolingo focused on the timing of the sign-up prompt as their variable.

Split Your Audience: Divide your audience into two groups, control and treatment, with the same characteristics to eliminate bias. In A/B testing, "control" is the original version, while "treatment" is the version with the variable changed for comparison. A careful split ensures the audiences in both groups are comparable. For instance, both groups should have a similar percentage of iOS users. Duolingo showed the control group the existing flow, while the treatment group experienced a delayed sign-up.

Analyze the Outcomes: Compare the performance of both groups using statistical methods (or lift calculation) to see which version is better.
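To make the "Split Your Audience" step concrete, here is a minimal sketch of one common way to assign users to groups: hashing a user ID into a bucket. This is an illustration, not Duolingo's actual method. The function name `assign_group`, the experiment label `delayed_signup`, and the 10% treatment share are all assumptions made for this example.

```python
import hashlib


def assign_group(user_id: str,
                 experiment: str = "delayed_signup",  # hypothetical experiment name
                 treatment_share: float = 0.10) -> str:
    """Deterministically assign a user to 'treatment' or 'control'."""
    # Hash the experiment name together with the user ID so the same user
    # always lands in the same group for this experiment.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "treatment" if bucket < treatment_share else "control"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(100_000)]  # made-up user IDs
    groups = [assign_group(u) for u in users]
    share = groups.count("treatment") / len(groups)
    print(f"treatment share: {share:.3f}")
```

Because the assignment depends only on the ID and the experiment name, a returning user keeps seeing the same version, and the split does not depend on device or sign-up date, which helps keep the two groups comparable.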

Initial Setup

This case study outlines the actual results of Duolingo's A/B testing; however, due to the lack of access to raw data, we'll use hypothetical numbers to illustrate their testing strategy and its potential effectiveness.

Assume Duolingo had 1 million new downloads per month, with a 10% sign-up rate, resulting in 100k new sign-ups.

Let’s take the treatment group as 100k users, with the control group comprising the rest, which is 900k. Why limit the treatment group to just 100k users? When exploring new changes, it's wise to conduct a small-scale experiment with a sufficient sample size. This approach lets you verify the effectiveness of the new change while minimizing risk before a broader rollout.

A/B Test #1: Delayed Sign-Up

By delaying the sign-up prompt and allowing users to engage with a few lessons, Duolingo hypothesized that experiencing the app's value firsthand would encourage more users to sign up.

Experiment Outcome:

Control Group: The download-to-sign-up rate remains at the original 10%.

Treatment Group: Observed a 12% sign-up rate after implementing delayed sign-up, a 20% relative increase (2 percentage points).

Lift Calculation: Applied to all 1 million monthly downloads, the higher conversion rate would yield 120k total sign-ups instead of 100k, translating to 20k additional sign-ups and potential DAUs.
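The lift arithmetic, plus a basic significance check, can be sketched in a few lines of Python. The numbers are the hypothetical ones from this case study (900k control users at 10%, 100k treatment users at 12%), not Duolingo's raw data. The two-proportion z-test is one standard statistical method we chose for illustration; the source does not say which test Duolingo used.

```python
import math


def relative_lift(control_rate: float, treatment_rate: float) -> float:
    """Relative improvement of treatment over control (0.2 means +20%)."""
    return (treatment_rate - control_rate) / control_rate


def two_proportion_z(conv_c: int, n_c: int, conv_t: int, n_t: int):
    """Two-sided two-proportion z-test; returns (z, p_value)."""
    p_c = conv_c / n_c
    p_t = conv_t / n_t
    # Pooled rate under the null hypothesis that both groups convert equally.
    p_pool = (conv_c + conv_t) / (n_c + n_t)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_c + 1 / n_t))
    z = (p_t - p_c) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


if __name__ == "__main__":
    # Hypothetical numbers from the case study above.
    n_control, n_treatment = 900_000, 100_000
    conv_control, conv_treatment = 90_000, 12_000  # 10% and 12%

    rate_c = conv_control / n_control
    rate_t = conv_treatment / n_treatment
    print(f"relative lift: {relative_lift(rate_c, rate_t):.1%}")

    # Extra sign-ups if the change were rolled out to all 1M downloads.
    extra = round(1_000_000 * (rate_t - rate_c))
    print(f"additional sign-ups at full rollout: {extra}")

    z, p = two_proportion_z(conv_control, n_control, conv_treatment, n_treatment)
    print(f"z = {z:.2f}, p-value = {p:.3g}")
```

With samples this large, a 2-percentage-point gap is far outside what random noise would produce, which is why a small p-value supports rolling the change out more broadly.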

That’s a significant improvement. This small-scale experiment could now be expanded to all Duolingo users.

A/B Test #2: Hard Walls vs Soft Walls

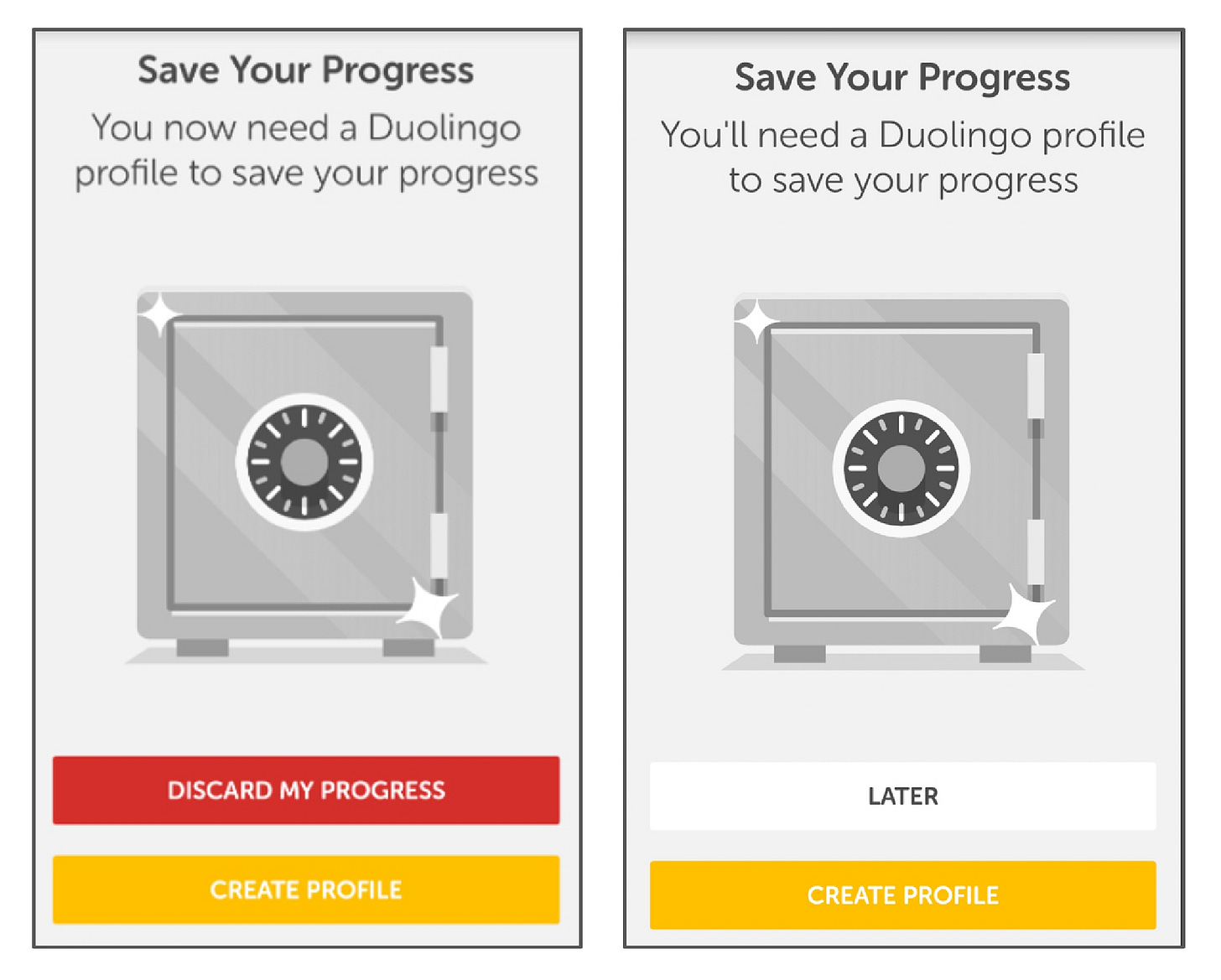

Following the success of the initial test, Duolingo iterated on when to present the sign-up prompts. Duolingo's interface featured a large, attention-grabbing red button at the bottom of the screen, boldly labeled 'Discard my progress', an explicit nudge for users to sign up immediately, effectively serving as a hard wall. The accompanying image shows the concept of hard vs soft wall: the left one features a hard wall for sign-up, while the right one introduces a soft wall.

The team theorized that users might be instinctively drawn to press the most visually prominent button, even if they were still interested in the app. This could lead to potential learners prematurely exiting the sign-up process.

Acting on this hypothesis, Duolingo decided to replace the bold red button with a subtler one labeled 'Later', a soft wall, resulting in another 8.2% increase in DAUs.

Key Takeaway:

Duolingo's experience underscores the importance of testing and iterating on product experience. The key insight is to identify the optimal moment when users see the value in your product and are most likely to sign up. By strategically placing soft walls at points of engagement and only introducing a hard wall after several interactions, Duolingo managed to align the sign-up process with the user's discovery of the product's value, significantly boosting sign-ups. Beyond sign-up optimizations, A/B testing has tremendous applications across various domains:

Product Features: Testing new product features or changes

Website Layouts: Comparing menu placements to reduce bounce rates.

Marketing Messages: Evaluating email subject lines for higher open rates.

Pricing Strategies: Assessing subscription plans for optimal profitability.

Have you encountered any fascinating A/B tests? We'd love to hear about them—please share your experience in the comments!

This naturally leads us to our next section, where we explain how conversion rates can be calculated to evaluate performance.

Metric of the Week: Conversion Rate

Conversion Rate measures the percentage of users who take a desired action, such as making a purchase, signing up for a newsletter, or completing a form, out of the total number of users who interacted with a particular webpage, advertisement, or campaign.

So why is Conversion Rate so important? Well, put simply, it provides valuable insights into the effectiveness of your marketing efforts, website design, and overall user experience.

Now, you might be wondering, how do we calculate Conversion Rate? It's quite straightforward! Simply divide the number of conversions (e.g., sign-ups) by the total number of visitors and multiply by 100 to get the percentage. At times, it's crucial to consider potential delays in conversion. In certain businesses, purchases may not occur on the day of the first interaction, leading to a lag in conversion.

Conversion Rate = (Number of Conversions / Total Number of Visitors) * 100

With this formula in hand, you can track Conversion Rates across various channels, campaigns, and periods to identify trends, spot opportunities for improvement, and optimize your strategies for maximum impact.
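Here is a small sketch of the formula in Python, including the conversion-lag point mentioned above: the same visitors can show different conversion rates depending on how long you wait before counting. The visit records, the function names, and the window lengths are all made up for illustration.

```python
from datetime import date


def conversion_rate(conversions: int, visitors: int) -> float:
    """Conversion rate as a percentage; 0.0 when there are no visitors."""
    if visitors == 0:
        return 0.0
    return 100 * conversions / visitors


def rate_within_window(visits, window_days: int) -> float:
    """Count a visitor as converted only if they converted within
    window_days of their first visit."""
    converted = sum(
        1
        for first_visit, converted_on in visits
        if converted_on is not None
        and (converted_on - first_visit).days <= window_days
    )
    return conversion_rate(converted, len(visits))


# Hypothetical records: (first visit date, conversion date or None).
VISITS = [
    (date(2024, 1, 1), date(2024, 1, 1)),   # converted the same day
    (date(2024, 1, 1), date(2024, 1, 5)),   # converted 4 days later
    (date(2024, 1, 2), None),               # never converted
    (date(2024, 1, 3), date(2024, 1, 20)),  # converted 17 days later
    (date(2024, 1, 3), None),               # never converted
]

if __name__ == "__main__":
    for window in (0, 7, 30):
        rate = rate_within_window(VISITS, window)
        print(f"{window:>2}-day window: {rate:.1f}%")
```

Picking the window is a judgment call: too short and you undercount slow converters, too long and you wait weeks before you can read a result.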

In summary, Conversion Rate is a vital metric for evaluating the performance of your product design, website, campaigns, and advertisements. By understanding and harnessing the power of Conversion Rate, you can make data-driven decisions that drive business success.

Check the insightful visualization on the Conversion Rate below.

Visualization Spotlight:

Conversion Rate by Industry (Landing Page Visitors to Lead Generation)

Learn more about when to use Average and Median here.

Human Bias Focus: Scarcity Effect

Did you know there are more than 180 ways your brain can trick you? These tricks, called cognitive biases, can negatively impact the way humans process information, think critically and perceive reality. They can even change how we see the world. In this section, we'll talk about one of these biases and show you how it pops up in everyday life.

Imagine you're given two jars of cookies.

One jar holds ten cookies and is almost full, while the other holds just two identical cookies.

Which jar’s cookies do you think seem more desirable?

This question was at the heart of an interesting experiment in 1975 by researchers Worchel, Lee, and Adewole. They wanted to understand how the idea of something being rare or scarce affects how much people want it.

Participants in the study were asked which cookies they thought were more desirable and valuable, not knowing what the researchers were really looking for. Even though the cookies looked and tasted the same, the ones in the jar with only two were rated as more desirable and valuable by most people. This finding showed how just making something seem rare can make it seem more important or valuable to us, highlighting the powerful effect that scarcity has on our choices and desires.

Here are a few additional examples:

Remember flash sales of Xiaomi phones when they initially launched on Flipkart? That’s leveraging scarcity to boost demand, driving quick sales, and enhancing exclusivity.

Popular brands like Nike and Adidas often release limited quantities of new sneaker designs. Despite being just shoes, their limited availability turns them into must-have items.

Remember, understanding any bias is the first step to overcoming its impact on our decision-making, and sometimes, to exploiting it.

Data Nugget: 37% Rule

Imagine you are part of a hiring team tasked with hiring the best secretary from a pool of candidates. You interview candidates sequentially. After each interview, you must either reject them or offer the position, thus closing the hiring process.

You're faced with the challenge of determining when exactly to commit to one of the candidates. Committing too early might mean overlooking more qualified candidates who appear later, but if you wait too long to make a decision, you might find that the best candidates have already been dismissed. This decision-making dilemma, common in sequential selection processes, highlights the challenge of pinpointing the perfect moment to make a commitment without full knowledge of what future options might bring.

This situation is central to a mathematical challenge known as the "Secretary Problem."

The solution involves the 37% rule, which suggests that you should initially evaluate and pass on the first 37% of the candidates. This phase isn't about identifying the ideal candidate but rather about establishing a standard of quality among the candidates. After you have assessed 37% of your options, the rule recommends choosing the next candidate who surpasses all those you've previously considered. This method, based on optimal stopping theory, is designed to enhance your chances of making the best possible hire.
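If you'd like to see the rule in action, here is a minimal simulation sketch. It assumes candidates can be ranked with no ties and arrive in random order, and that you must take the last candidate if nobody beats your benchmark. The function names, the pool of 100 candidates, and the number of trials are our choices for illustration.

```python
import random


def pick_with_cutoff(scores, cutoff: int):
    """Skip the first `cutoff` candidates, then pick the first one who
    beats everyone seen so far. Fall back to the last candidate."""
    benchmark = max(scores[:cutoff]) if cutoff > 0 else float("-inf")
    for score in scores[cutoff:]:
        if score > benchmark:
            return score
    return scores[-1]  # nobody beat the benchmark, so we are stuck with the last


def success_rate(n: int = 100, cutoff: int = 37,
                 trials: int = 20_000, seed: int = 0) -> float:
    """Fraction of simulated hiring rounds that end with the best candidate."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        scores = list(range(n))  # n - 1 is the best candidate
        rng.shuffle(scores)      # candidates arrive in random order
        if pick_with_cutoff(scores, cutoff) == n - 1:
            wins += 1
    return wins / trials


if __name__ == "__main__":
    for cutoff in (10, 37, 70):
        print(f"skip first {cutoff}: best hired in {success_rate(cutoff=cutoff):.1%} of runs")
```

Running it with different cutoffs shows the trade-off described above: skip too few and you commit before you know what good looks like, skip too many and the best candidate is often already gone.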

Interestingly, this approach gives you roughly a 37% chance of selecting the best candidate, which matches the percentage of candidates you explore before committing. This is no coincidence: both numbers come from 1/e ≈ 0.368. For a more in-depth exploration, you may read about this problem here.
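For readers who want to check the 37% figure without simulation, the success probability can be computed exactly. The sketch below uses the standard formula for the classic Secretary Problem: if you skip the first r of n candidates, the chance of ending up with the best one is (r/n) × (1/r + 1/(r+1) + ... + 1/(n-1)). The function names are ours.

```python
import math


def win_probability(n: int, r: int) -> float:
    """Exact chance of hiring the best of n candidates when the first r
    are skipped and the next candidate who beats them all is hired."""
    if r == 0:
        return 1 / n  # no benchmark: you simply hire the first candidate
    return (r / n) * sum(1 / (k - 1) for k in range(r + 1, n + 1))


def best_cutoff(n: int) -> int:
    """Number of candidates to skip that maximizes the win probability."""
    return max(range(n), key=lambda r: win_probability(n, r))


if __name__ == "__main__":
    n = 100
    r = best_cutoff(n)
    print(f"best cutoff for {n} candidates: skip {r}")
    print(f"win probability: {win_probability(n, r):.4f}")
    print(f"1/e:             {1 / math.e:.4f}")
```

As the candidate pool grows, both the best fraction to skip and the resulting success probability approach 1/e.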

The 37% rule also applies in various sequential decision-making scenarios. Whether you're selecting a rental property or searching for a life partner, this rule advises spending the initial 37% of your search evaluating options without committing. This strategy helps balance gathering enough information with making timely decisions, ensuring well-informed choices in areas as diverse as real estate and personal relationships.

However, it's worth noting that while the 37% rule offers a neat mathematical strategy, life isn't a spreadsheet—especially in matters of the heart. So, although it's useful in decision-making, remember that if you've found the perfect one, it's time to take the leap.

That wraps up our newsletter for today! We've simplified intricate data concepts and will continue to do so in future editions. If you found this helpful, please consider subscribing and sharing it with someone who would appreciate it—it motivates us to go the extra mile to create more such content. And, if you are facing a sequential decision-making challenge, such as finding a new home, remember the 37% rule!

Reference - Duolingo Image, Conversion Rate Graph
