There are lies, damn lies, and statistics! 📊🤥

Also, let's understand how we are influenced by outcome bias and average of averages.

Hello, data enthusiasts and curious minds!

Welcome to our 7th edition of DataPulse Weekly, where we unravel the magic behind data and its impact on our daily lives.

Each newsletter promises to be a journey through the fascinating intersections of data, stories, and human experiences. Whether you're an analyst or simply curious about how data shapes our world, you're in the right place.

Bonus: Look forward to entertaining memes later in this issue! Let us know in the comments if you’d like to see more in upcoming editions.

Let’s dive straight into today’s Data Menu -

Today’s Data Menu

Data Case Study: How Statistics Can Fool Us?

Metric: CLV

Meme of the Week

Human Bias Focus: Outcome Bias

Data Nugget: Average of Averages

Data Case Study:

"I will start working out tomorrow." That's probably a lie.

"I will start working out, reading books, and waking up early tomorrow." Now, that's a damn lie.

Alright, maybe we're just stretching the truth a bit, but isn't that the tale of most of our tomorrows?

However, there is another kind of lie that's not about what we say to ourselves.

Mark Twain popularized the saying, "There are lies, damn lies, and statistics." Today, we're going to talk about how numbers and statistics can sometimes trick us.

Misleading statistics can have serious consequences, like receiving the wrong medical treatment. To understand this better, let's look at a real-life study involving two surgery options for kidney stones.

Here are the two treatment options for kidney stones:

Treatment A: Open surgical procedures.

Treatment B: Closed surgical procedures.

Now, let's delve into the success rates based on the size of the stone and the type of treatment.

Findings:

Overall: Combining all cases, Treatment B appears more effective with an 83% success rate, compared to Treatment A’s 78%.

Small Stones: Treatment A shows higher effectiveness (93%) than Treatment B (87%).

Large Stones: Treatment A also outperforms Treatment B (73% versus 69%).

Wait, what? Yes, this is an instance of Simpson’s Paradox, where a trend observable within individual groups reverses when the groups are combined.

Two factors are at play here:

Distribution: Doctors tended to assign the more effective Treatment A to severe cases involving large stones, and the less effective Treatment B to milder, small-stone cases. Consequently, most of the data falls into two groups (small stones treated with B and large stones treated with A), which outweigh the two much smaller groups (small stones treated with A and large stones treated with B) when everything is combined.

Success Rate: The severity of the condition, rather than the treatment type, primarily determines success rates. Hence, patients with large stones treated with Treatment A fare worse than those with small stones treated with Treatment B.

The paradoxical outcome arises because the size of the kidney stones significantly impacts treatment efficacy, overshadowing the advantages of Treatment A. Essentially, Treatment B seems more effective largely because it is frequently used for smaller, less severe cases, which are inherently easier to treat.
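To see the reversal in numbers, here is a minimal Python sketch. The patient counts are not given in this newsletter; they are the figures usually quoted for the 1986 kidney stone study and are assumed here because they reproduce the percentages above. The function name success_rate is ours, purely for illustration.

```python
# (successes, patients) for each (treatment, stone size) group.
# Assumption: these counts are not in the newsletter text; they are the
# commonly quoted figures for the 1986 kidney stone study.
cases = {
    ("A", "small"): (81, 87),
    ("A", "large"): (192, 263),
    ("B", "small"): (234, 270),
    ("B", "large"): (55, 80),
}


def success_rate(treatment, size=None):
    """Success rate for a treatment, optionally restricted to one stone size."""
    rows = [
        counts
        for (t, s), counts in cases.items()
        if t == treatment and (size is None or s == size)
    ]
    successes = sum(r[0] for r in rows)
    patients = sum(r[1] for r in rows)
    return successes / patients


for size in ("small", "large", None):
    label = size or "overall"
    rate_a = success_rate("A", size)
    rate_b = success_rate("B", size)
    print(f"{label:>7}: A = {rate_a:.0%}, B = {rate_b:.0%}")
```

Treatment A wins within each stone size, yet B wins overall, because A's total is dominated by the hard large-stone cases and B's by the easy small-stone cases.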

Key Takeaway:

When evaluating outcomes or making decisions, always dig deeper and check whether the result still holds within each subgroup, as the breakdown by stone size showed here. Relying only on surface-level results can be misleading. Understanding the context and underlying variables allows you to make informed choices that accurately reflect the complexities of any situation.

This brings us to our next section where we explain a key data metric - CLV

Metric of the Week: CLV

CLV, or Customer Lifetime Value, represents the total revenue a business can reasonably expect from a single customer account throughout the business relationship. This metric is crucial in understanding how much value each customer brings over time.

Let's examine a hypothetical scenario for an online subscription service like Netflix. Suppose the average monthly subscription fee is $15, and the average customer retention period is three years:

CLV = Average Monthly Subscription Fee × 12 months × Average Customer Lifespan in Years = $15 × 12 × 3 = $540
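The same arithmetic as a small Python sketch. The function name simple_clv is hypothetical, and this is the revenue-only version used in this example; it ignores costs, discounting, and churn patterns that fuller CLV models may include.

```python
def simple_clv(monthly_fee, lifespan_years):
    """Revenue-only CLV: monthly fee x 12 months x years retained."""
    return monthly_fee * 12 * lifespan_years


# The subscription example from the text: $15 per month for three years.
print(simple_clv(15, 3))  # 540
```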

Why It Matters:

Customer Lifetime Value (CLV) is essential for evaluating how much revenue a customer can generate over their relationship with a company. It helps businesses determine how much they should invest in customer acquisition and retention strategies. A higher CLV indicates the potential for greater profitability, guiding companies in optimizing their marketing spend and enhancing customer relationships for sustained revenue growth.

💡 Remember that building a data mindset is effective only when we focus on solving data-related problems. The question below is designed for exactly this kind of practice. We will address it in the last section of this newsletter.

Food for thought:

Imagine you're in charge of HR analytics for a company with offices in New York and San Francisco. The average salary is $80,000 in New York and $120,000 in San Francisco. Should you simply average these two figures to find out what employees earn across the company?

Meme of the Week:

Human Bias Focus: Outcome Bias

Did you know there are more than 180 ways your brain can trick you? These tricks, called cognitive biases, can negatively impact the way humans process information, think critically and perceive reality. They can even change how we see the world. In this section, we'll talk about one of these biases and show you how it pops up in everyday life.

On September 24, 2007, the cricket world watched as the inaugural T20 World Cup came down to a thrilling finale between arch-rivals India and Pakistan. The stakes couldn't have been higher, and the atmosphere was electric. The match boiled down to the final over with Pakistan needing just 13 runs to win.

In a decision that stunned many, MS Dhoni, the captain of the Indian team, handed the ball to Joginder Sharma, a relatively inexperienced bowler, for the final over. This was a high-risk move given Joginder's lack of experience in such high-pressure situations, contrasting with other seasoned bowlers in the team.

The tension peaked as the game neared its climax. In a pivotal moment, the Pakistani batter attempted a daring shot to clinch the victory but misjudged it, sending the ball straight to an Indian fielder. The catch sealed Pakistan's fate, and the rest is history: India won the World Cup.

This remarkable incident from the 2007 T20 World Cup is a classic example of outcome bias, where the quality of a decision, no matter how unconventional or risky, is judged solely by its result. In this case, Dhoni’s choice to use Joginder Sharma, despite his inexperience, turned out to be successful, leading many to view it as a brilliant move. Had India lost the match, the same decision might have been harshly criticized as poor judgment.

For instance:

Say a company invests heavily in a new technology that most analyses predict will fail because of its high risk. The technology unexpectedly succeeds thanks to unforeseen market changes, and the decision is praised despite the poor initial analysis. The praise rewards the lucky outcome, not the decision-making process.

These examples demonstrate how outcome bias can affect our perception in various fields, making us prone to overlook the quality of the decision-making process when the outcome is favorable. It's crucial to assess decisions based on the information and context available at the time, rather than the hindsight provided by outcomes, to avoid repeating potentially risky behaviors that just happened to work out once.

Remember, understanding any bias is the first step to overcoming its impact on our decision-making. We covered more biases in our previous newsletters.

This brings us to the last section of our newsletter.

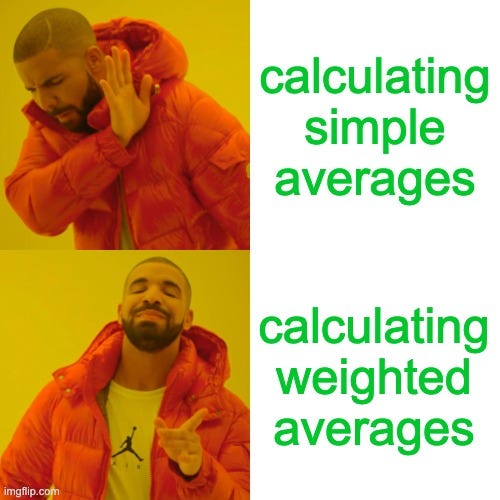

Data Nugget: Average of Averages

Exploring the vital role of data in decision-making, let's revisit our earlier question: Should we calculate an overall average to understand what employees earn across different offices? The short answer is no. This approach overlooks a critical element—the number of employees in each location.

New York Office: Home to 300 employees, each earning an average salary of $80,000.

San Francisco Office: Comprises 30 employees, with an average salary of $120,000.

If you take a simple average of these two average salaries: Average Salary = (80,000 + 120,000) / 2 = $100,000

This method would suggest the average salary across the company is $100,000, potentially influencing compensation strategies. However, this average of averages does not account for the significant disparity in the number of employees between the offices.

Since the New York office has ten times as many employees as the San Francisco office, its average salary should weigh far more heavily in the overall average.

The appropriate method here is a weighted average: Weighted Average Salary = (300 × 80,000 + 30 × 120,000) / 330 = $83,636

This weighted calculation reveals a more accurate average salary of $83,636, substantially lower than the $100,000 derived from a simple average of averages.
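The two calculations can be compared side by side in a short Python sketch. The headcounts and salaries are the ones from this example; the names offices, simple_average, and weighted_average are ours, for illustration only.

```python
# office name -> (number of employees, average salary in dollars)
offices = {
    "New York": (300, 80_000),
    "San Francisco": (30, 120_000),
}


def simple_average(offices):
    """Average of the office averages; ignores how many people are in each office."""
    return sum(avg for _, avg in offices.values()) / len(offices)


def weighted_average(offices):
    """Total payroll divided by total headcount."""
    total_pay = sum(n * avg for n, avg in offices.values())
    headcount = sum(n for n, _ in offices.values())
    return total_pay / headcount


print(f"Simple average:   ${simple_average(offices):,.0f}")    # $100,000
print(f"Weighted average: ${weighted_average(offices):,.0f}")  # $83,636
```

The weighted version is simply total payroll divided by total headcount, which is what "average salary across the company" usually means.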

This example illustrates the misleading potential of averaging averages, which can treat unequal groups as if they are of equal significance. This is also applicable when taking an average of ratios. Next time you encounter a problem involving averages, ask yourself: are you taking a weighted average or a simple average?

Also, read about the difference between Average and Median.

That wraps up our newsletter for today! We've simplified intricate data concepts and will continue to do so in future editions. If you found this helpful, please consider subscribing and sharing it with someone who would appreciate it—it motivates us to go the extra mile to create more such content.
